Physicist Warns That AI Consumes Energy Exponentially While Giants Transfer Risks - Why These 24 Hours Reveal the Crossroads Between Sustainability and Accelerated Growth

December 30, 2025 | by Matos AI

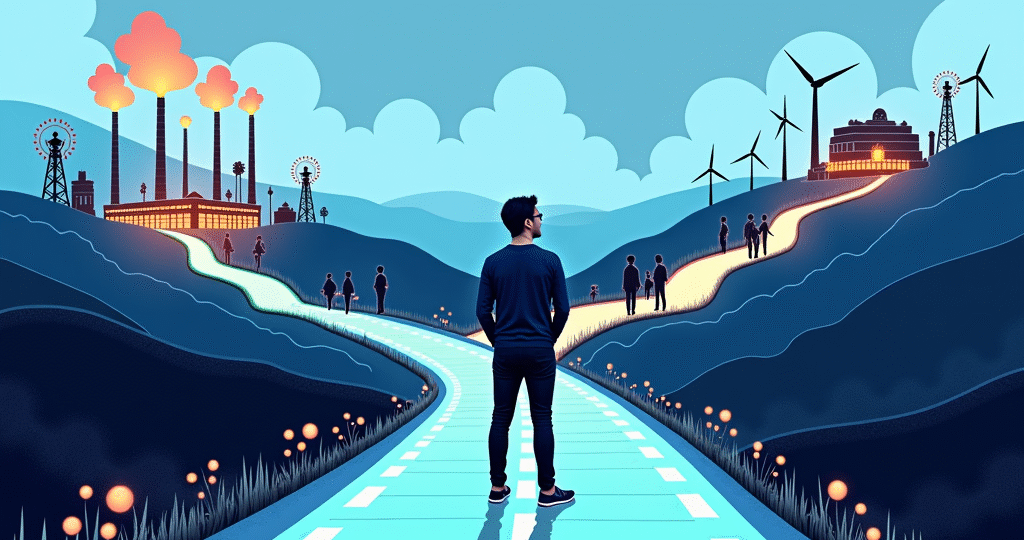

We are at a crossroads. The choice is no longer whether to adopt AI; that decision has already been made. The question now is which path we will take: one where machines consume resources exponentially while the financial and environmental risks are transferred to the most vulnerable, or one where we consciously build a technology that serves humanity without destroying the planet?

The last 24 hours brought a series of news items that, taken together, expose exactly this tension. On one hand, physicist Mario Rasetti warns that AI is developing at a “doubly exponential” rate and consuming energy and water at unsustainable levels. On the other, companies like Meta and Microsoft are transferring the risks of this race to smaller investors through sophisticated financial structures.

Meanwhile, the book Atlas of AI documents the extractive trail of this industry, and experts are debating how to mitigate inequalities in the job market.


It's not catastrophism. It's realism. And it's also an opportunity to choose the right path.

The Fastest Revolution in History and Its Invisible Cost

Mario Rasetti, a world-renowned physicist and professor emeritus at the Politecnico di Torino, doesn't mince words. For him, AI represents “perhaps the greatest cultural revolution in the entire history of Homo sapiens”, an “anthropological transition”.

But unlike other technological revolutions, this one is happening at “double exponential” speed. What does this mean in practice? In ordinary exponential growth, a quantity doubles at a steady rate. In double exponential growth, the exponent itself grows exponentially, so each cycle brings a bigger leap than the one before. It's as if we were in a car whose accelerator increased its own power every second.
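Rasetti's phrase is qualitative, but the mathematical distinction behind it is easy to see in numbers. The minimal sketch below assumes base 2 and abstract "cycles"; it measures nothing about real AI systems. An exponential quantity doubles each cycle (2^t), while a double-exponential quantity 2^(2^t) is squared each cycle.

```python
def exponential(t: int) -> int:
    """Doubles once per cycle: 2**t."""
    return 2 ** t


def double_exponential(t: int) -> int:
    """The exponent itself doubles each cycle: 2**(2**t), so the value is squared."""
    return 2 ** (2 ** t)


# Print both series side by side for the first six cycles.
for t in range(6):
    print(f"cycle {t}: exponential = {exponential(t):>2}, "
          f"double exponential = {double_exponential(t)}")
```

By cycle 5 the exponential series has reached 32, while the double-exponential series has reached 4,294,967,296 (2^32).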

And here's the problem: the physical infrastructure does not keep up with this speed.

Rasetti specifically warns of two critical costs:

- Energy: The data centers that run AI consume monumental amounts of electricity. He cites Google's data centers in Ireland and Microsoft's investments in Three Mile Island (yes, the same nuclear power plant that had the famous accident in 1979).

- Water: Cooling these servers consumes water on an industrial scale, with a direct impact on regions already facing water scarcity.

I've been working with companies and governments on AI strategy for years, and one question I always ask is: “Do you know how much it costs, in environmental terms, to run this model?” The answer, more often than not, is silence.

Not because people are irresponsible, but because this information is deliberately kept invisible. The cloud seems light, but, as Kate Crawford documents in Atlas of AI, it is sustained by a brutal extractive chain.

The Unsustainable Lightness of the Cloud

Kate Crawford, an internationally recognized researcher, dismantles the myth of “clean” technology. Her book shows that AI relies on:

- Mineral extraction: Lithium, cobalt and rare earths, essential elements for supercomputers, are often extracted under precarious conditions that transform entire landscapes.

- Global supply chains: A logistics chain that connects mines in Africa to factories in Asia and data centers in the US and Europe.

- Exponential energy consumption: AI has become one of the biggest energy consumers on the planet.

And then there are the social costs: privacy constantly mined, algorithms that reproduce historical prejudices, and a concentration of power in the hands of an ever smaller technological elite.

The point is not to abandon AI, but to demand that it be built in a sustainable and democratic way. In my work with companies, I always stress this: responsible AI is not just an ethical issue; it's a question of long-term viability.

How Giants Transfer Risk (And Why It Matters)

While environmental costs are being made invisible, financial risks are being transferred in increasingly sophisticated ways.

A New York Times report published by InfoMoney shows how Meta and Microsoft use complex financial structures to expand their data centers without carrying the debt directly on their balance sheets.

Meta, for example, created a special-purpose vehicle called Beignet Investor LLC. The vehicle issued bonds that mature in 2049 (!), transferring the risk to private creditors such as Blue Owl Capital and Pimco.

The strategy is simple (and ingenious, from a financial point of view):

- Rent computing capacity from third parties

- Wait for demand to be confirmed

- Only then make a long-term financial commitment

- If the boom slows down, exit the agreements, with the costs classified as operating expenses

Who takes the loss? Smaller companies, suppliers and investors who have committed to the long-term infrastructure.

As Shivaram Rajgopal, professor at Columbia Business School, said: “Risk is like a tube of toothpaste. You squeeze it here, it comes out somewhere else.”

It reminds me of the accounting structures that preceded the dotcom bubble and the 2008 crisis. The difference is that now the asset in question is not just mortgages or shares in internet companies, but the AI computing infrastructure itself.

Experts compare these arrangements to older financing methods that add opacity. And where there is opacity, there is systemic risk.

What Does This Mean for the Innovation Ecosystem?

If you are an entrepreneur, executive or investor, pay attention: the concentration of risks in smaller players can create a domino effect.

Imagine a Brazilian startup that has developed a promising AI solution and needs computing power. It can:

- Rent from a large cloud provider (which may readjust prices or withdraw from the agreement)

- Invest in its own infrastructure (taking on long-term debt)

- Rely on private credit (with high interest rates and transferred risks)

While the giants have the flexibility to navigate these options, startups and medium-sized companies are exposed.

In my mentoring work with executives and companies, I always stress this: it's not enough to have a good AI idea. You need to understand the cost and risk structure of the infrastructure that supports it.

The Crossroads: Three Possible Paths

Rasetti uses a powerful metaphor: we are at a crossroads. And like every crossroads, we have to choose a path.

Path 1: Accelerate Without Limits

Continuing the exponential race, ignoring environmental and social costs, transferring risks to the most vulnerable. It's the path of growth at any cost.

Consequence: Environmental collapse, systemic financial crises, brutal widening of inequalities.

Path 2: Radical Braking

Imposing moratoriums, restricting development, treating AI as an existential threat. It's the path of fear.

Consequence: Loss of real opportunities to solve complex problems, falling behind competitively, and concentration of power in the countries that didn't brake.

Path 3: Turning AI from Practice into Science

This is Rasetti's proposal, and the one that makes the most sense: understanding the technology deeply, which means establishing clear ethical and environmental limits, democratizing access, and building responsible governance.

Consequence: Sustainable, inclusive AI that serves humanity without destroying the planet.

I choose the third way. And I believe that most people, when they understand the real options, choose it too.

AI Is Not Creative, It Has No Conscience - And That's Fundamental

Rasetti makes a point of reaffirming something we often forget in the hype: intelligent machines will never have feelings or consciousness.

AI represents reality, but it does not understand it. It's like Plato's allegory of the cave: the machine sees the shadows projected on the wall, but not reality itself.

And more:

- AI is not creative: It reorganizes existing patterns, but does not genuinely create new knowledge.

- It doesn't understand deeper meaning: It can process language, but it doesn't understand human experience.

- It can't correct itself: It depends on human intervention for ethical and contextual adjustments.

Rasetti's conclusion is comforting and challenging at the same time: “We human beings are much more powerful than machines.”

But that also means that the responsibility lies with us.

In my work with companies, one of the main transformations I seek is precisely this change in mentality: from “AI will solve everything” to “AI is a powerful tool that needs strategic and ethical human guidance”.

The Impact on the World of Work: Between Opportunity and Inequality

An article published in JOTA addresses exactly this tension: AI transforms the labor market by creating new jobs and making others obsolete, but the pace is much faster than our ability to adapt.

The main concern is not whether AI will create or destroy jobs - it will do both. The question is: who will have access to the necessary retraining?

Three Strategies That Work

The article highlights three proven ways to mitigate inequalities:

1. Digital skills training

Successful examples in Kenya show that proper training generates real income growth. It's not theory - it's empirical evidence.

2. Reskilling within companies

Companies that adopt AI and reorganize functions (instead of just cutting jobs) generate significant salary gains for those who are retrained. And note: socio-emotional skills are increasingly valued.

AI can process data, but it can't negotiate conflicts, lead diverse teams, or understand the cultural nuances of a market.

3. AI-facilitated inclusion

AI tools for creating CVs have increased the chances of less qualified candidates being hired by 8%. Technology can democratize access - when applied well.

The risk: Screening algorithms can reproduce historical prejudices. If the training data reflects past discrimination, AI amplifies that discrimination.

That's why I always stress that responsible AI requires constant auditing, diverse development teams, and transparent criteria.
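Auditing a screening system can start with simple statistical checks. One widely used heuristic is the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures: a group whose selection rate falls below 80% of the highest group's rate is flagged for closer review. The sketch below is a minimal illustration under that assumption, not the author's method. The group names, counts and function names are hypothetical, and a flag is a signal to investigate, not proof of discrimination.

```python
from typing import Dict, List, Tuple

# Hypothetical input format: group name -> (candidates selected, candidates screened).
Outcomes = Dict[str, Tuple[int, int]]


def selection_rates(outcomes: Outcomes) -> Dict[str, float]:
    """Share of screened candidates in each group who passed the screen."""
    rates = {}
    for group, (selected, screened) in outcomes.items():
        if screened <= 0:
            raise ValueError(f"group {group!r} has no screened candidates")
        rates[group] = selected / screened
    return rates


def impact_ratios(outcomes: Outcomes) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any selections; ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(outcomes: Outcomes, threshold: float = 0.8) -> List[str]:
    """Groups whose impact ratio falls below the four-fifths (0.8) threshold."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Made-up screening results, for illustration only.
example = {"group_a": (50, 100), "group_b": (30, 100)}
print(impact_ratios(example))   # group_b ratio is about 0.6 (0.30 / 0.50)
print(flagged_groups(example))  # group_b falls below the 0.8 threshold
```

A ratio is only a starting point; a real audit would also look at sample sizes, the features the model relies on, and outcomes after hiring.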

Rethinking Social Security

The article raises a crucial point: it will be necessary to rethink social security in order to accommodate the gig economy and flexible working.

The traditional model of formal, contract-based employment is being complemented (and in some cases replaced) by more fluid arrangements. This requires integrated public policies that protect workers without stifling innovation.

It's a complex debate, but one that can't be postponed.

Curious Cases That Reveal Deep Trends

The last 24 hours also brought some seemingly minor stories that nonetheless reveal important trends.

Autonomous Cars That “Read the Minds” of Pedestrians

Researchers from the USA and South Korea have developed OmniPredict, an AI that predicts pedestrian behavior in real time with 67% accuracy - 10% more than previous models.

The system analyzes 16 video frames (about half a second) to predict the action 30 frames ahead (about a second). It doesn't sound like much, but it is enough for a car to plan maneuvers in advance, which makes traffic flow more smoothly and reduces sudden stops.
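The frame counts above translate into time and distance with simple arithmetic. The sketch below assumes a frame rate of 30 frames per second (the rate implied by "16 frames, about half a second" and "30 frames, about a second") and an illustrative urban speed of 50 km/h; neither value comes from the OmniPredict work itself, and the function names are hypothetical.

```python
FPS = 30  # assumption: frame rate implied by the frame/second figures in the text


def frames_to_seconds(frames: int, fps: float = FPS) -> float:
    """Convert a number of video frames into seconds of footage."""
    return frames / fps


def metres_travelled(speed_kmh: float, seconds: float) -> float:
    """Distance covered at constant speed; dividing km/h by 3.6 gives m/s."""
    return speed_kmh / 3.6 * seconds


observed = frames_to_seconds(16)  # input window the model looks at
horizon = frames_to_seconds(30)   # how far ahead the prediction reaches
print(f"observation window: {observed:.2f} s")  # 0.53 s
print(f"prediction horizon: {horizon:.2f} s")   # 1.00 s
print(f"distance covered at 50 km/h: {metres_travelled(50, horizon):.1f} m")  # 13.9 m
```

Under these assumptions, a one-second horizon corresponds to roughly 14 metres of travel at 50 km/h, which gives a sense of why a second of anticipation matters for planning.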

The innovation lies in the use of zero-shot learning and language models to “reason” about human intentions. Instead of just reacting, the vehicle anticipates.

Limitations: The system struggles with strong shadows and, in certain conditions, with telling pedestrians apart from cyclists.

This perfectly illustrates what Rasetti says: AI represents, but it doesn't understand. It identifies patterns of behavior, but it doesn't understand why someone acts in a certain way.

Fake AI Videos and the Crisis of Confidence

A viral video which claimed to show a Military Police “views factory” inflating hits on YouTube - reaching 1.2 million views on X - was created using AI.

Although click farms are a real scam, the use of AI to produce videos about them raises concerns about layered disinformation.

It's not just the fake content that's worrying, but the AI's ability to generate credible material about real fraudulent practices, creating confusion between what is genuine and what is manufactured.

Enforcement is hampered by the lack of specific legislation in many countries. And here we come back to the crossroads: we need governance.

TJRJ Spends R$ 518 Thousand on AI Course in Italy

Twenty-three judges from the TJRJ (Rio de Janeiro State Court of Justice) attended a course on “Law, Justice and Artificial Intelligence” at the University of Milan, costing the public coffers R$ 518,000.

The news has generated controversy, but I see two sides:

Positive side: Judges do need to understand AI in order to judge cases involving the technology. Regulation, data protection, the ethical challenges of judicial automation - these are key issues.

Problematic side: The cost and opacity. Are there courses of equivalent quality in Brazil? Why choose an expensive international course? What is the real impact of this investment on how the law is applied?

The TJRJ is planning three more similar courses in Europe in 2026. Transparency and accountability are essential, especially when it comes to public resources.

What to Do at This Crossroads?

So we come back to the initial question: which path to choose?

I believe the right path rests on five fundamental principles:

1. Radical Transparency on Environmental Costs

Companies need to disclose the energy and water consumption of their AI models. Not as marketing, but as a mandatory metric. Just as we have nutritional labels on food, we need “environmental labels” on AI.
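To make the "environmental label" idea concrete, here is a minimal sketch of what such a disclosure might contain. Everything in it is an assumption for illustration: the class name, the per-query metrics and the placeholder values are hypothetical, not an existing standard or a measurement of any real model. Real figures would depend on hardware, data-center efficiency, cooling technology and location.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class EnvironmentalLabel:
    """Hypothetical disclosure for an AI model, in the spirit of a nutrition label."""
    model_name: str
    energy_wh_per_query: float  # watt-hours of electricity per query (assumed metric)
    water_ml_per_query: float   # millilitres of cooling water per query (assumed metric)

    def footprint(self, queries: int) -> Dict[str, float]:
        """Scale the per-query figures to a total for a given number of queries."""
        return {
            "energy_kwh": self.energy_wh_per_query * queries / 1000,  # Wh -> kWh
            "water_litres": self.water_ml_per_query * queries / 1000,  # mL -> L
        }


# Placeholder numbers, NOT measurements of any real model.
label = EnvironmentalLabel("example-model", energy_wh_per_query=0.5, water_ml_per_query=2.0)
print(label.footprint(1_000_000))
```

With these placeholder values, a million queries come to 500 kWh and 2,000 litres. The design choice is to disclose per-query figures, so that anyone can scale them to their own usage, much as nutrition labels give values per serving.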

2. Strict Financial Governance

Structures that transfer risks in an opaque way must be regulated. Not to make innovation unviable, but to protect the ecosystem as a whole.

3. Massive Investment in Reskilling

Companies, governments and support organizations need to create accessible and practical training programs. There's no point in talking about the “future of work” without preparing people for it.

4. Ethical Auditing of Algorithms

AI systems used in critical decisions (hiring, credit, justice) should be regularly audited by diverse and independent teams.

5. Conscious Leadership

Executives and entrepreneurs need to understand that AI is not magic. It's powerful technology that requires informed strategic decisions, social responsibility and long-term vision.

As Rasetti emphasizes: we human beings are more powerful than machines. But only if we exercise this leadership consciously.

The Choice is Ours - And the Time is Now

The last 24 hours have painted a clear picture of the crossroads we are at. On one hand, technology is developing exponentially, consuming resources and transferring risks. On the other, there is the possibility of building sustainable, inclusive and responsible AI.

The choice is not between having or not having AI. It's between having AI that serves humanity or having humanity that serves AI.

Rasetti reminds us that we are living through perhaps the greatest cultural revolution in human history. Crawford warns us about the hidden costs. Experts point to concrete ways to mitigate inequalities.

My question to you is: which path will you and your organization choose?

In my mentoring work with executives and companies, I help navigate exactly this crossroads - translating technical complexity into applicable strategy, connecting innovation with responsibility, and building real organizational capacity to take advantage of AI without falling into the traps of hype or extractivism.

Because in the end, the most powerful AI isn't the one that processes the most data. It's the one that serves a clear purpose, is led by conscious people, and is built to last.

And this path, unlike an algorithm, is not one you can build alone.

